14.4 – Randomized block design

Introduction

Randomized Block Designs and Two-Factor ANOVA

In previous lectures, we have introduced you to the standard factorial ANOVA, which may be characterized as being crossed, balanced, and replicated. We expect additional factors (covariates) may contribute to differences among our experimental units, but rather than testing them — which would increase need for additional experimental units because of increased number of groups to test — we randomize our subjects. Randomization is intended to disrupt trends of confounding variables (aka covariates). If the resulting experiment has missing values (see Chapter 5), then we can say that the design is partially replicated; if only one observation is made per group, then the design is not replicated — and perhaps, not very useful!!

A special type of Two-factor ANOVA which includes a “blocking” factor and a treatment factor.

Randomization is one way to control for “uninteresting” confounding factors. Clearly, there will be scenarios in which randomization is impossible. For example, it is impossible to randomly assign subjects to The blocking factor is similar to the 10.3 – Paired t-test. In the paired t-test we had two individuals or groups that we paired (e.g. twins). One specific design is called the Randomized Block Design and we can have more than 2 members in the group. We arrange the experimental units into similar groups, i.e., the blocking factor. Examples of blocking factors may include day (experiments are run over different days), location (experiments may be run at different locations in the laboratory), nucleic acid kits (different vendors), operator (different assistants may work on the experiments), etcetera.

In general we may not be directly interested in the blocking factor. This blocking factor is used to control some factor(s) that we suspect might affect the response variable. Importantly, this has the effect of reducing the sums of squares by an amount equal to the sums of squares for the block. If variability removed by the blocking is significant, Mean Square Error (MSE) will be smaller, meaning that the F for treatment will be bigger — meaning we have a more powerful ANOVA than if we had ignored the blocking.

Statistical Testing in Randomized Block Designs

“Blocks” is a Random Factor because we are “sampling” a few blocks out of a larger possible number of blocks. Treatment is a Fixed Factor, usually.

The statistical model is

![]()

The Sources of Variation are simpler than the more typical Two-Factor ANOVA because we do not calculate all the sources of variation – the interaction is not tested! (Table 1).

Table 1. Sources of variation for a two-way ANOVA, randomized block design

|

Sources of Variation & Sum of Squares

|

DF

|

Mean Squares

|

![]()

Critical Value F0.05(2), (a – 1), (Total DF – a – b)

In the exercise example above: Factor A = exercise or management plan.

Notice that we do not look at the interaction MS or the Blocking Factor (typically).

Learn by doing

Rather than me telling you, try on your own. We’ll begin with a worked example, then proceed to introduce you to three problems. Click here (Ch 14.8)for general discussion of Rcmdr and linear models for models other than the standard 2-way ANOVA (Chapter 14.8).

Worked example

Wheel running by mice is a standard measure of activity behavior. Even wild mice will use wheels (Meijer and Roberts 2014). For example, we conduct a study of family parity to see if offspring from the first, second, or third sets of births from wheel-running behavior in mice (total revs per 24 hr period). Each set of offspring from a female could be treated as a block. Data are for 3 female offspring from each pairing. This type of data set would look like this:

Table 2. Wheel running behavior by three offspring from each of three birth cohorts among four maternal sets (moms).

|

Revolutions of wheel per 24-hr period

|

||||

|

Block

|

Dam 1

|

Dam 2

|

Dam 3

|

Dam 4

|

|

b1

|

1100

|

1566

|

945

|

450

|

|

b1

|

1245

|

1478

|

877

|

501

|

|

b1

|

1115

|

1502

|

892

|

394

|

|

b2

|

999

|

451

|

644

|

605

|

|

b2

|

899

|

405

|

650

|

612

|

|

b2

|

745

|

344

|

605

|

700

|

|

b3

|

1245

|

702

|

1712

|

790

|

|

b3

|

1300

|

612

|

1745

|

850

|

|

b3

|

1750

|

508

|

1680

|

910

|

Thus, there were nine offspring for each female mouse (Dam1 – Dam4), three offspring per each of three litters of pups. The litters are the blocks. We need to get the data stacked to run in R. I’ve provided the dataset for you, scroll to end of this page or click here.

Question 1. Describe the problem and identify the treatment and blocking factors.

Answer. Each female has three litters. We’re primarily interested in genetics (and maternal environment) of wheel running behavior, which is associated with the moms (Treatment factor). The questions is whether there is an effect of birth parity on wheel running behavior. Offspring of a first-time mother may experience different environment than offspring of experienced mother. In this case, parity effects is an interesting question, nevertheless, blocking is the appropriate way to handle this type of problem.

Question 2. What is the statistical model?

Answer. Response variable, Y, is wheel running. Let α the effect of Dam and β the birth cohorts (i.e., the blocking effect).

![]()

Question 3. Test the model.

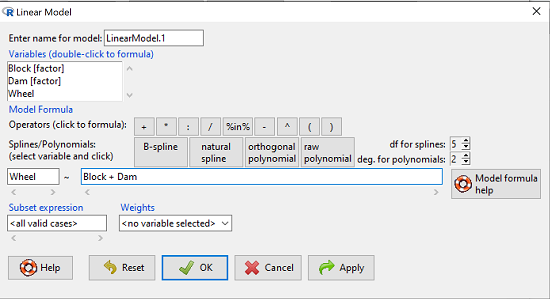

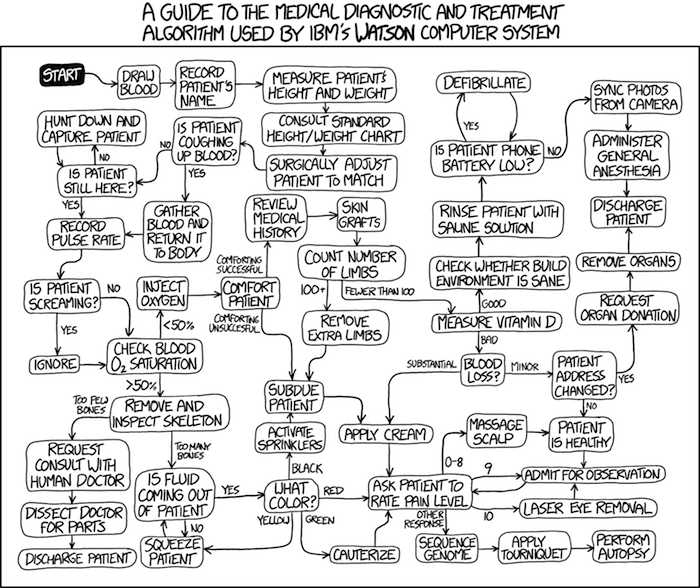

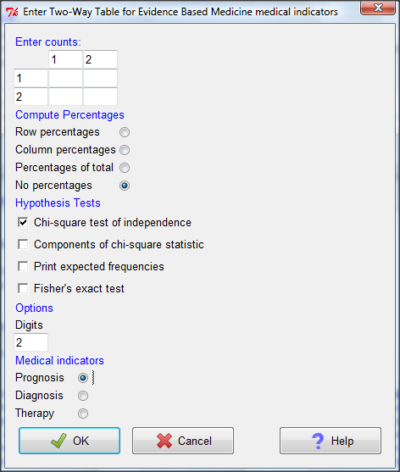

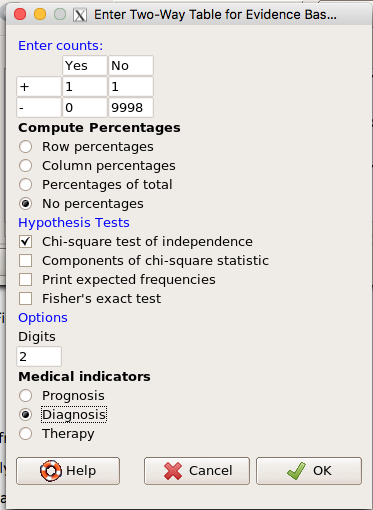

Answer. We fit the main effects, Dam and Block Fig 1.

![]()

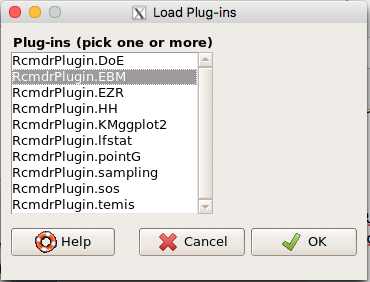

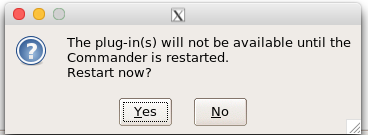

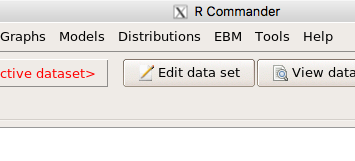

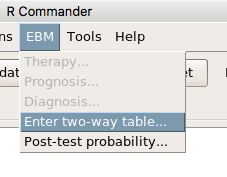

Rcmdr: Statistics → Fit models → Linear model

Figure 1. Screenshot Rcmdr Linear Model menu.

then run the ANOVA summary to get the ANOVA table. Rcmdr: Models → Hypothesis tests → ANOVA table.

Output

Anova Table (Type II tests)

Response: Wheel

Sum Sq Df F value Pr(>F)

Dam 1467020 3 4.4732 0.01036 *

Block 1672166 2 7.6482 0.00207 **

Residuals 3279544 30

Question 4. Conclusions?

Answer. The null hypotheses are:

Treatment factor: Offspring of the different dams have same wheel running activity of offspring.

Blocking factor: No effect of litter parity on wheel running activity of offspring.

Both the treatment factor (p = 0.01036) and the blocking factor (p = 0.00207) were statistically significant.

Try on your own

Problem 1.

Or we might want to measure the Systolic Blood Pressure of individuals that are on different exercise regimens. However, we are not able to measure all the individuals on the same day at the same time. We suspect that time of day and the day of the year might effect an individuals blood pressure. Given this constraint, the best research design in this circumstance is to measure one individual on each exercise regime at the same time. These different individuals will then be in the same “block” because they share in common the time that their blood pressure was measured. This type of data set would look like this (Table 2):

Table 2. Simulated blood pressure of five subjects on three different exercise regimens.†

|

Subject

|

No

Exercise |

Moderate Exercise

|

Intense Exercise

|

|

1

|

120

|

115

|

114

|

|

2

|

135

|

130

|

131

|

|

3

|

115

|

110

|

109

|

|

4

|

112

|

107

|

106

|

|

5

|

108

|

103

|

102

|

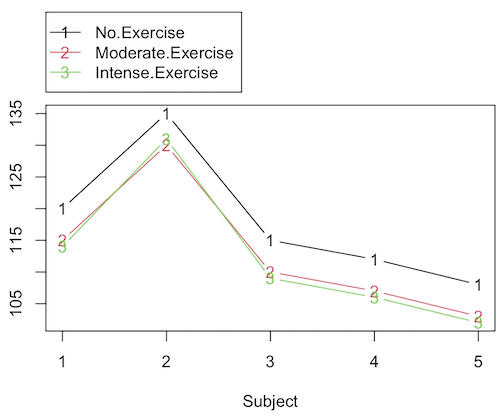

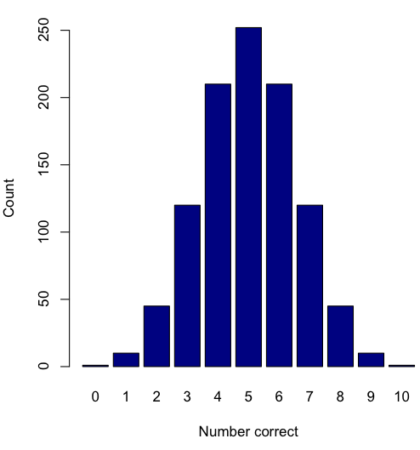

Let’s make a line graph to help us visualize trends (Fig 2).

Figure 2. Line graph of data presented in Table 2.

Question 1. Describe the problem and identify the treatment and blocking factors.

Question 2. What is the statistical model?

Question 3. Test the model.

Question 4. Conclusions?

†You’ll need to arrange the data like the data set for the worked example.

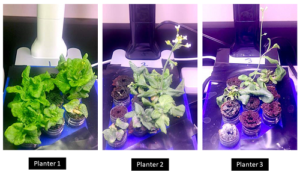

Problem 2.

Another example in conservation biology or agriculture. There may be three different management strategies for promoting the recovery of a plant species. A good research design would be to choose many plots of land (blocks) and perform each treatment (management strategy) on a portion of each plot of land (block). A researcher would start with an equal number of plantings in the plots and see how many grew. The plots of land (blocks) share in common many other aspects of that particular plot of land that may effect the recovery of a species.

Table 3. Growth of plants in 5 different plots subjected to one of three management plans (simulated data set).†

|

Plot No.

|

No Management Used

|

Management

Plan 1 |

Management

Plan 2 |

|

1

|

0

|

11

|

14

|

|

2

|

2

|

13

|

15

|

|

3

|

3

|

11

|

19

|

|

4

|

4

|

10

|

16

|

|

5

|

5

|

15

|

12

|

†You’ll need to arrange the data the same arrangement as for the worked example.

These are examples of Two-Factor ANOVA but we are usually only interested in the treatment Factor. We recognize that the blocking factor may contribute to differences among groups and so wish to control for the fact that we carried out the experiments at different times (e.g., seasons) or at different locations (e.g., agriculture plots)

Question 1. Describe the problem and identify the treatment and blocking factors. Make a line graph to help visualize.

Question 2. What is the statistical model?

Question 3. Test the model.

Question 4. Conclusions?

Repeated-Measures Experimental Design

If multiple measures are taken on the same individual, then we have a repeated-measures experiment. This is a Randomized Block Design. In other words, each animal gets all levels of a treatment (assigned randomly). Thus, samples (individuals) are not independent and the analysis needs to take this into account. Just like for paired-T tests, one can imagine a number of experiments in biomedicine that would conform to this design.

Problem 3.

The data are total blood cholesterol levels for 7 individuals given 3 different drugs (from example given in Zar 1999, Ex 12.5, pp. 257-258).

Table 5. Repeated measures of blood cholesterol levels of seven subjects on three different drug regimens.†

|

Subjects

|

Drug1

|

Drug2

|

Drug3

|

|

1

|

164

|

152

|

178

|

|

2

|

202

|

181

|

222

|

|

3

|

143

|

136

|

132

|

|

4

|

210

|

194

|

216

|

|

5

|

228

|

219

|

245

|

|

6

|

173

|

159

|

182

|

|

7

|

161

|

157

|

165

|

†You’ll need to arrange the data like the data set for the worked example.

Question 1: Is there an interaction term in this design? Make a line graph to help visualize.

Question 2: Are individuals a fixed or a random effect?

Question 2. What is the statistical model?

Question 3. Test the model. Note that we could have done the experiment with 21 randomly selected subjects and a one-factor ANOVA. However, the repeated measures design is best IF there is some association (“correlation”) between the data in each row. The computations are identical to the randomized block analysis.

Question 4. Conclusions?

Problem 4.

Figure 3. Juvenile garter snake, image from GetArchive, public domain.

Here is a second example of a repeated measures design experiment. Garter snakes (Fig 3) respond to odor cues to find prey. Snakes use their tongues to “taste” the air for chemicals, and flick their tongues rapidly when in contact with suitable prey items, less frequently for items not suitable for prey. In the laboratory, researchers can test how individual snakes respond to different chemical cues by presenting each snake with a swab containing a particular chemical. The researcher then counts how many times the snake flicks its tongue in a certain time period (data presented p. 301, Glover and Mitchell 2016).

Table 6. Tongue flick counts of naïve newborn snakes to extracts†

|

Snake

|

Control (dH2O)

|

Fish mucus

|

Worm mucus

|

|

1

|

3

|

6

|

6

|

|

2

|

0

|

22

|

22

|

|

3

|

0

|

12

|

12

|

|

4

|

5

|

24

|

24

|

|

5

|

1

|

16

|

16

|

|

6

|

2

|

16

|

16

|

†You’ll need to arrange the data like the data set for the worked example.

Question 1. Describe the problem and identify the treatment and blocking factors. Make a line graph to help visualize.

Question 2. What is the statistical model?

Question 3. Test the model.

Question 4. Conclusions?

Additional questions

- The advantage of a randomized block design over a completely randomized design is that we may compare treatments by using ________________ experimental units.

A. randomly selected

B. the same or nearly the same

C. independent

D. dependent

E. All of the above - Which of the following is NOT found in an ANOVA table for a randomized block design?

A. Sum of squares due to interaction

B. Sum of squares due to factor 1

C. Sum of squares due to factor 2

D. None of the above are correct - A clinician wishes to compare the effectiveness of three competing brands of blood pressure medication. She takes a random sample of 60 people with high blood pressure and randomly assigns 20 of these 60 people to each of the three brands of blood pressure medication. She then measures the decrease in blood pressure that each person experiences. This is an example of (select all that apply)

A. a completely randomized experimental design

B. a randomized block design

C. a two-factor factorial experiment

D. a random effects or Type II ANOVA

E. a mixed model or Type III ANOVA

F. a fixed effects model or Type I ANOVA - A clinician wishes to compare the effectiveness of three competing brands of blood pressure medication. She takes a random sample of 60 people with high blood pressure and randomly assigns 20 of these 60 people to each of the three brands of blood pressure medication. She then measures the blood pressure before treatment and again 6 weeks after treatment for each person. This is an example of (select all that apply)

A. a completely randomized experimental design

B. a randomized block design

C. a two-factor factorial experiment

D. a random effects or Type II ANOVA

E. a mixed model or Type III ANOVA

F. a fixed effects model or Type I ANOVA

Data sets used in this page

Problem 1 data set

| Block | Dam | Wheel |

| B1 | D1 | 1100 |

| B1 | D2 | 1566 |

| B1 | D3 | 945 |

| B1 | D4 | 450 |

| B1 | D1 | 1245 |

| B1 | D2 | 1478 |

| B1 | D3 | 877 |

| B1 | D4 | 501 |

| B1 | D1 | 1115 |

| B1 | D2 | 1502 |

| B1 | D3 | 892 |

| B1 | D4 | 394 |

| B2 | D1 | 999 |

| B2 | D2 | 451 |

| B2 | D3 | 644 |

| B2 | D4 | 605 |

| B2 | D1 | 899 |

| B2 | D2 | 405 |

| B2 | D3 | 650 |

| B2 | D4 | 612 |

| B2 | D1 | 745 |

| B2 | D2 | 344 |

| B2 | D3 | 605 |

| B2 | D4 | 700 |

| B3 | D1 | 1245 |

| B3 | D2 | 702 |

| B3 | D3 | 1712 |

| B3 | D4 | 790 |

| B3 | D1 | 1300 |

| B3 | D2 | 612 |

| B3 | D3 | 1745 |

| B3 | D4 | 850 |

| B3 | D1 | 1750 |

| B3 | D2 | 508 |

| B3 | D3 | 1680 |

| B3 | D4 | 910 |

Chapter 14 contents

12.3 – Fixed effects, random effects, and agreement

Introduction

Within discussions of one-way ANOVA models the distinction between two general classes of models needs to be made clear by the researcher. The distinction lies in how the levels of the factor are selected. If the researcher selects the levels, then the model is a Fixed Effects Model, also called a Model I ANOVA. On the other hand, if the levels of the factor were selected by random sampling from all possible levels of the factor, then the model is a Random Effects Model, also called a Model II ANOVA. This page is about such models and I’ll introduce the intraclass correlation coefficient, abbreviated as ICC, as a way to illustrate applications of Model II ANOVA.

Here’s an example to help the distinction. Consider an experiment to see if over the counter painkillers are as good as prescription pain relievers at reducing numbers of migraines over a six week period. The researcher selects Tylenol®, Advil®, Bayer® Aspirin, and Sumatriptan (Imitrex®), the latter an example of a medicine only available by prescription. This is clearly an example of fixed effects; the researcher selected the particular medicines for use.

Random effects, in contrast, implies that the researcher draws up a list of all over the counter pain relievers and draws at random three medicines; the researcher would also randomly select from a list of all available prescription medicines.

Fixed effects are probably the more common experimental approaches. To be complete, there is a third class of ANOVA called a Mixed Model or Model III ANOVA, but this type of model only applies to multidimensional ANOVA (e.g., two-way ANOVA or higher), and we reserve our discussion of the Model III until we discuss multidimensional ANOVA (Table 1).

Table 1. ANOVA models

| ANOVA model | Treatments are |

| I | Fixed effects |

| II | Random effects |

| III | Mixed, both fixed & random effects |

Although the calculations for the one-way ANOVA under Model I or Model II are the same, the interpretation of the statistical significance is different between the two.

In Model I ANOVA, any statistical difference applies to the differences among the levels selected, but cannot be generalized back to the population. In contrast, statistical significance of the Factor variable in Model II ANOVA cannot be interpreted as specific differences among the levels of the treatment factor, but instead, apply to the population of levels of the factor. In short, Model I ANOVA results apply only to the study, whereas Model II ANOVA results may be interpreted as general effects, applicable to the population.

This distinction between fixed effects and random effects can be confusing, but it has broad implications for how we interpret our results in the short-term. This conceptual distinction between how the levels of the factor are selected also has general implications for our ability to acquire generalizable knowledge by meta-analysis techniques (Hunter and Schmidt 2000). Often we wish to generalize our results: we can do so only if the levels of the factor were randomly selected in the first place from all possible levels of the factor. In reality, this may not often be the case. It is not difficult to find examples in the published literature in which the experimental design is clearly fixed effects (i.e., the researcher selected the treatment levels for a reason), and yet in the discussion of the statistical results, the researcher will lapse into generalizations.

Random Effects Models and agreement

Model II ANOVA is common in settings in which individuals are measured more than once. For example, in behavioral science or in sports science, subjects are typically measured for the response variable more than once over a course of several trials. Another common setting of Model II ANOVA is where more than one raters are judging an event or even a science project. In all of these cases what we are asking is about whether or not the subjects are consistent, in other words, we are asking about the precision of the instrument or measure.

In the assessment of learning by students, for example, different approaches may be tried and the instructor may wish to investigate whether the interventions can explain changes in test scores. To assess instrument validity — an item score from a grading rubric (Goertzen and Klaus 2023), tumor budding as biomarker for colorectal cancer (Bokhorst et al 2020) — agreement or concordance among two or more raters or judges (inter-rater reliability) needs to be established (same citations and references therein). For binomial data, we would be tempted to use the phi coefficient, Is it actually reliable Cohen’s kappa (two judges) or Fleiss Kappa (two or more judges) is a better tool. Phi coefficient and both assessment scores were introduced in Chapter 9.2.

For ratio scale data, agreement among scores of two or more scores from judges There is an enormous number of articles on reliability measures in the social sciences and you should be aware of a classical paper on reliability by Shrout and Fleiss (1979) (see also McGraw and Wong, 1996). Both the ICC and the Pearson product moment correlation, r, which we will introduce in Chapter 16, are measures of strength of linear association between two ratio scale variables (Jinyuan et al 2016). But ICC is more appropriate for association between repeat measures of the same thing, e.g., repeat measures of running speed or agreement between judges. In contrast, the product moment correlation can be used to describe association between any two variables, e.g., between repeat measures of running speed, but also between say running speed and maximum jumping height. The concept of repeatability of individual behavior or other characteristics is also a common theme in genetics and so you should not be surprised to learn that the concept actually traces to RA Fisher and his invention of ANOVA. Just as in the case of the sociology literature, there are many papers on the use and interpretation of repeatability in the evolutionary biology literature (e.g., Lessels and Boag 1987; Boake 1989; Falconer and Mackay 1996; Dohm 2002; Wolak et al 2012).

There are many ways to analyze these kinds of data but a good way is to treat this problem as a one-way ANOVA with Random Effects. Thus, the Random Effects model permits the partitioning of the variation in the study into two portions: the amount that is due to differences among the subjects or judges or intervention versus the amount that is due to variation within the subjects themselves. The Factor is the Subjects and the levels of the factor are how ever many subjects are measured twice or more for the response variable.

If the subjects performance is repeatable, then the Mean Square Between (Among) Subjects, ![]() , component will be greater than the Mean Square Error component,

, component will be greater than the Mean Square Error component, ![]() , of the model. There are many measures of repeatability or reliability, but the intraclass correlation coefficient or ICC is one of the most common. The ICC may be calculated from the Mean Squares gathered from a Random Effects one-way ANOVA. ICC can take any value between zero and one.

, of the model. There are many measures of repeatability or reliability, but the intraclass correlation coefficient or ICC is one of the most common. The ICC may be calculated from the Mean Squares gathered from a Random Effects one-way ANOVA. ICC can take any value between zero and one.

![]()

where

![]()

and

![]()

B and W refers to the among group (between or among groups mean square) and the within group components of variation (error mean square), respectively, from the ANOVA, MS refers to the Mean Squares, and k is the number of repeat measures for each experimental unit. In this formulation k is assumed to be the same for each subject.

By example, when a collection of sprinters run a race, if they ran it again, would the outcome be the same, or at least predictable? If the race is run over and over again and the runners cross the finish lines at different times each race, then much of the variation in performance times will be due to race differences largely independent of any performance abilities of the runners themselves and the Mean Square Error term will be large and the Between subjects Mean Square will be small. In contrast, if the race order is preserved race after race: Jenny is 1st, Ellen is 2nd, Michael is third, and so on, race after race, then differences in performance are largely due to individual differences. In this case, the Between-subjects Mean Square will be large as will the ICC, whereas the Mean Square for Error will be small, and so will the ICC.

Can the intraclass correlation be negative?

In theory, no. Values for ICC range between zero and one. The familiar Pearson product moment correlation, Chapter 16, takes any value between -1 and +1. However, in practice, negative values for ICC will result if ![]() .

.

In other words, if the within group variability is greater than the among group variability, then negative ICC are possible. The question is whether negative estimates are ever biologically relevant or, simply result of limitations of the estimation procedure. Small ICC values and few repeats increases the risk of negative ICC estimates. Thus, a negative ICC would be “simply a(n) “unfortunate” estimate (Liljequist et al 2019). However, there may be situations in which negative ICC is more than unfortunate. For example, repeatability, a quantitative genetics concept (Falconer and Mackay 1996), can be negative (Nakagawa and Schielzeth 2010).

![]()

Repeatability reflects the consistency of individual traits over time (or spatially, e.g., scales on on a snake), is defined as the ratio of within individual differences and among individuals differences. Thus, within individual variance is attributed to general environmental effects, ![]() , whereas variance among individuals is both genetic and environmental. Scenarios for which repeated measures on the same individual may occur under environmental conditions, termed specific environmental effects or

, whereas variance among individuals is both genetic and environmental. Scenarios for which repeated measures on the same individual may occur under environmental conditions, termed specific environmental effects or ![]() by Falconer and Mackay (1996), that negatively affect performance are not hard to imagine — it remains to be determined how common these conditions are and whether or not estimates of repeatability are negative.

by Falconer and Mackay (1996), that negatively affect performance are not hard to imagine — it remains to be determined how common these conditions are and whether or not estimates of repeatability are negative.

ICC Example

I extracted 15 data points from a figure about nitrogen metabolism in kidney patients following treatment with antibiotics (Figure 1, Mitch et al 1977). I used a web application called WebPlot Digitizer (https://apps.automeris.io/wpd/), but you can also accomplish this task within R via the digitize package. I was concerned about how steady my hand was using my laptop’s small touch screen, a problem that very much can be answered by thinking statistically, and taking advantage of the ICC. So, rather than taking just one estimate of each point I repeated the protocol for extracting the points from the figure three times generating a total of three points for each of the 15 data points or 45 points in all. How consistent was I?

Let’s look at the results just for three points, #1, 2, and 3.

In the R script window enter

points = c(1,2,3,1,2,3,1,2,3)

Change points to character so that the ANOVA command will treat the numbers as factor levels.

points = as.character(points) extracts = c(2.0478, 12.2555, 16.0489, 2.0478, 11.9637, 16.0489, 2.0478, 12.2555, 16.0489)

Make a data frame, assign to an object, e.g., “digitizer”

digitizer = data.frame(points, extracts)

The dataset “digitizer” should now be attached and available to you within Rcmdr. Select digitizer data set and proceed with the one-way ANOVA.

Output from oneway ANOVA command

Model.1 <- aov(extracts ~ points, data=digitizer)

summary(Model.1)

Df Sum Sq Mean Sq F value Pr(>F)

points 2 313.38895 156.69448 16562.49 5.9395e-12 ***

Residuals 6 0.05676 0.00946

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

numSummary(digitizer$extracts , groups=digitizer$points,

+ statistics=c("mean", "sd"))

mean sd data:n

1 2.04780000 0.0000000000 3

2 12.15823333 0.1684708085 3

3 16.04890000 0.0000000000 3

End R output. We need to calculate the ICC

![]()

I’d say that’s pretty repeatable and highly precise measurement!

But is it accurate? You should be able to disentangle accuracy from precision based on our previous discussion (Chapter 3.5), but now in the context of a practical way to quantify precision.

ICC calculations in R

We could continue to calculate the ICC by hand, but better to have a function. Here’s a crack at the function to calculate ICC along with a 95% confidence interval.

myICC <- function(m, k, dfN, dfD) {

testMe <- anova(m)

MSB <- testMe$"Mean Sq"[1]

MSE <- testMe$"Mean Sq"[2]

varB <- MSB - MSE/k

ICC <- varB/(varB+MSE)

fval <- qf(c(.025), df1=dfN, df2=dfD, lower.tail=TRUE)

CI = (k*MSE*fval)/(MSB+MSE*(k-1)*fval)

LCIR = ICC-CI

UCIR = ICC+CI

myList = c(ICC, LCIR, UCIR)

return(myList)

}

The user supplies the ANOVA model object (e.g., Model.1 from our example), k, which is the number of repeats per unit, and degrees of freedom for the among groups comparison (2 in this example), and the error mean square (6 in this case). Our example, run the function

m2ICC = myICC(Model.1, 3, 2,6); m2ICC

and R returns

[1] 0.9999396 0.9999350 0.9999442

with the ICC reported first, 0.9999396, followed by the lower limit (0.9999350) and the upper limit (0.9999442) of the 95% confidence interval.

In lieu of your own function, at several packages available for R will calculate the intraclass correlation coefficient and its variants. These packages are: irr, psy, psych, and rptR. For complex experiments involving multiple predictor variables, these packages are helpful for obtaining the correct ICC calculation (cf Shrout and Fleiss 1979; McGraw and Wong 1996). For the one-way ANOVA it is easier to just extract the information you need from the ANOVA table and run the calculation directly. We do so for a couple of examples.

Example: Are marathon runners consistent more consistent than my commute times?

A marathon is 26 miles, 385 yards (42.195 kilometers) long (World Athletics.org). And yet, tens of thousands of people choose to run in these events. For many, running a marathon is a one-off, the culmination of a personal fitness goal. For others, its a passion and a few are simply extraordinary, elite runners who can complete the courses in 2 to 3 hours (Table 3). That’s about 12.5 miles per hour. For comparison, my 20 mile commute on the H1 freeway on Oahu typically takes about 40 minutes to complete, or 27 miles per hour (Table 2, yes, I keep track of my commute times, per Galton’s famous maxim: “Whenever you can, count”).

Table 2. A sampling of commute speeds, miles per hour (mph), on the H1 freeway during DrD’s morning commute

| Week | Monday | Tuesday | Wednesday | Thursday | Friday |

| 1 | 28.5 | 23.8 | 28.5 | 30.2 | 26.9 |

| 2 | 25.8 | 22.4 | 29.3 | 26.2 | 27.7 |

| 3 | 26.2 | 22.6 | 24.9 | 24.2 | 34.3 |

| 4 | 23.3 | 26.9 | 31.3 | 26.2 | 30.2 |

Calculate the ICC for my commute speeds.

Run the one-way ANOVA to get the necessary mean squares and input the values into our ICC function. We have

require(psych) m2ICC = myICC(AnovaModel.1, 4, 4,11); m2ICC [1] 0.7390535 0.6061784 0.8719286

Repeatability, as estimated by the ICC, was 0.74 (95% CI 0.606, 0.872), for repeat measures of commute times.

We can ask the same about marathon runners — how consistent from race to race are these runners? The following data are race times drawn from a sample of runners who completed the Honolulu Marathon in both 2016 and 2017 in 2 to 3 hours (times recorded in minutes). In other words, are elite runners consistent?

Table 3. Honolulu marathon running times (min) for eleven repeat, elite runners.

| ID | Time |

|---|---|

| P1 | 179.9 |

| P1 | 192.0 |

| P2 | 129.9 |

| P2 | 130.8 |

| P3 | 128.5 |

| P3 | 129.6 |

| P4 | 179.4 |

| P4 | 179.7 |

| P5 | 174.3 |

| P5 | 181.7 |

| P6 | 177.2 |

| P6 | 176.2 |

| P7 | 169.0 |

| P7 | 173.4 |

| P8 | 174.1 |

| P8 | 175.2 |

| P9 | 175.1 |

| P9 | 174.2 |

| P10 | 163.9 |

| P10 | 175.9 |

| P11 | 179.3 |

| P11 | 179.8 |

Stacked worksheet, P refers to person, therefore P1 is person ID 1, P2 is person ID 2, and so on.

After running a one-way ANOVA, here are results for the marathon runners

m2ICC = myICC(Model.1, 2, 10,11); m2ICC [1] 0.9780464 0.9660059 0.9900868

Repeatability, as estimated by the ICC, was 0.98 (95% CI 0.966, 0.990), for repeat measures of marathon performance. Put more simply, knowing what a runner did in 2016 I would be able to predict their 2017 race performance with high confidence, 98%!

And now, we compare: the runners are more consistent!

Clearly this is an apples-to-oranges comparison, but it gives us a chance to think about how we might make such comparisons. The ICC will change because of differences among individuals. For example, if individuals are not variable, then xx too little variation.

Visualizing repeated measures

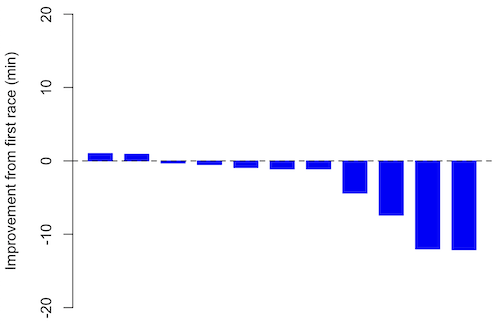

If just two measures, then a profile plot will do. We introduced profile plots in the paired t-test chapter (Ch10.3). For more than two repeated measures, a line graph will do (see question 9 below). Another graphic increasingly used is the waterfall plot (waterfall chart), particularly for before and after measures or other comparisons against a baseline. For example, it’s a natural question for our eleven marathon runners — how much did they improve on the second attempt of the same course? Figure 2 shows my effort to provide a visual.

Figure 1. Simple waterfall plot or race improvement for Table 3 data. Dashed horizontal line at zero.

R code

Data wrangling: Create a new variable, e.g., race21, by subtracting the first time from the second time. Multiply by -1 so that improvement (faster time in second race) is positive. Finally, apply descending sort so that improvement is on the left and increasingly poorer times are on the right.

barplot(base21, col="blue", border="blue", space=0.5, ylim=c(-20,20),ylab="Improvement from first race (min)") abline(h=0, lty=2)

From the graph we quickly see that few of the racers improved their times the second time around.

An example for you to work, from our Measurement Day

If you recall, we had you calculate length and width measures on shells from samples of gastropod and bivalve species. In the table are repeated measures of shell length, by caliper in mm, for a sample of Conus shells (Fig 2 and Table 4).

Figure 2. Conus shells, image by M. Dohm

Repeated measures of the same 12 Conus shells.

Table 4. Unstacked dataset of repeated length measures on 12 shells

| Sample | Measure1 | Measure2 | Measure3 |

|---|---|---|---|

| 1 | 45.74 | 46.44 | 46.79 |

| 2 | 48.79 | 49.41 | 49.67 |

| 3 | 52.79 | 53.45 | 53.36 |

| 4 | 52.74 | 53.14 | 53.14 |

| 5 | 53.25 | 53.45 | 53.15 |

| 6 | 53.25 | 53.64 | 53.65 |

| 7 | 31.18 | 31.59 | 31.44 |

| 8 | 40.73 | 41.03 | 41.11 |

| 9 | 43.18 | 43.23 | 43.2 |

| 10 | 47.1 | 47.64 | 47.64 |

| 11 | 49.53 | 50.32 | 50.24 |

| 12 | 53.96 | 54.5 | 54.56 |

Maximum length from from anterior end to apex with calipers. Repeated measures were conducted blind to shell id, sampling was random over three separate time periods.

Questions

- Consider data in Table 2, Table 3, and Table 4. True or False: The arithmetic mean is an appropriate measure of central tendency. Explain your answer.

- Enter the shell data into R; Best to copy and stack the data in your spreadsheet, then import into R or R Commander. Once imported, don’t forget to change Sample to character, otherwise R will treat Sample as ratio scale data type. Run your one-way ANOVA and calculate the intraclass correlation (ICC) for the dataset. Is the shell length measure repeatable?

- True or False. A fixed effects ANOVA implies that the researcher selected levels of all treatments.

- True or False. A random effects ANOVA implies that the researcher selected levels of all treatments.

- A clinician wishes to compare the effectiveness of three competing brands of blood pressure medication. She takes a random sample of 60 people with high blood pressure and randomly assigns 20 of these 60 people to each of the three brands of blood pressure medication. She then measures the decrease in blood pressure that each person experiences. This is an example of (select all that apply)

A. a completely randomized experimental design

B. a randomized block design

C. a two-factor factorial experiment

D. a random effects or Type II ANOVA

E. a mixed model or Type III ANOVA

F. a fixed effects model or Type I ANOVA - A clinician wishes to compare the effectiveness of three competing brands of blood pressure medication. She takes a random sample of 60 people with high blood pressure and randomly assigns 20 of these 60 people to each of the three brands of blood pressure medication. She then measures the blood pressure before treatment and again 6 weeks after treatment for each person. This is an example of (select all that apply)

A. a completely randomized experimental design

B. a randomized block design

C. a two-factor factorial experiment

D. a random effects or Type II ANOVA

E. a mixed model or Type III ANOVA

F. a fixed effects model or Type I ANOVA - The advantage of a randomized block design over a completely randomized design is that we may compare treatments by using ________________ experimental units.

A. randomly selected

B. the same or nearly the same

C. independent

D. dependent

E. All of the above - The marathon results in Table 2 are paired data, results of two races run by the same individuals. Make a profile plot for the data (see Ch10.3 for help).

- A profile plot is used to show paired data; for two or more repeat measures, use a line graph instead.

A. Produce a line graph for data in Table 4. The line graph is an option in R Commander (Rcmdr: Graphs Line graph…), and can be generated with the following simple R code:

lineplot(Sample, Measure1, Measure2, Measure3)

B. What trends, if any, do you see? Report your observations.

C. Make another line plot for data in Table 2. Report any trend and include your observations.

Chapter 12 contents

- Introduction

- The need for ANOVA

- One way ANOVA

- Fixed effects, random effects, and agreement

- ANOVA from “sufficient statistics”

- Effect size for ANOVA

- ANOVA posthoc tests

- Many tests one model

- References and suggested readings

6.2 – Ratios and probabilities

Introduction

Let’s define our terms. An event is some occurrence. As you know, a ratio is one number, the numerator, divided by another called the denominator. A proportion is a ratio where the numerator is a part of the whole. A rate is a ratio of the frequency of an event during a certain period of time. A rate may or may not be a proportion, and a ratio need not be a proportion, but proportions and rates are all kinds of ratios. If we combine ratios, proportions, and/or rates, we construct an index.

Ratios

Yes, data analysis can be complicated, but we start with this basic idea. Much of the statistics is based on frequency measures, e.g., ratios, rates, proportions, indexes, and scales.

Ratios are the association between two numbers, one random variable divided by another. Ratios are used as descriptors and the numerator and denominator do not need to be of the same kind. Business and economics are full of ratios. For example, Return on investment (ROI), eg, earnings equals net income divided by number of shares outstanding, the Price-Earnings, or P/E ratio, is the ratio of the price of a stock to the earnings per stock, as well as many others are used to summarize performance of a business, and to compare performance of one business against another.

Note 1: ROI also stands for region of interest — a sample in a data set identified by the analyst for a particular interest. Often refers to a specific area in an image.

Ratios are deceptively convenient way to standardize a variable for comparisons, i.e., how many times one number contains another. For example, when estimating bird counts for different areas, or different birding effort (intensity, time searched), we may correct counts by accounting for area in which counts were made or the total time spent counting, for a per-unit ratio (Liermann et al 2004).

Practice: There were 1,326 day undergraduate students enrolled in 2014 at Chaminade University of Honolulu and the Sullivan Library added 8469 new items (ebooks, journals, etc.,) to its collection during 2014. What is the ratio of new items per student?

![]()

Data collected from Chaminade University website at www.chaminade.edu on 3 July 2014.

Practice: For another example, what is ratio of annual institutional aid a student at Chaminade University may expect to receive compared to a student at Hawaii Pacific University?

![]()

Thus, in 2014, Chaminade offered more than twice the institutional aid to its students then does HPU (both institutions are private universities, data from retrieved on 3 July 2014 from www.cappex.com).

Fold-change

The ratio between two quantities, e.g., to compare mRNA expression levels of genes from organisms exposed to different conditions, researchers may report fold-change.

An example of calculation of fold change. Rates of the expression from cells exposed to heavy metal divided by expression under basal conditions. Gene expression under different treatments may be evaluated by calculating fold-change as the log base2 of the ratio of expression of a gene for one treatment divided by expression of same gene from control conditions. Copper is an essential trace element, but excess exposure to copper is known to damage human health, including chronic obstructive pulmonary disease. One proposed mechanism is that cell injury promotes an epithelial-to-mesenchyme shift. In a pilot study we investigated gene expression changes by quantitative real-time polymerase chain reaction (qPCR) in a rat lung Type II alveolar cell line exposed to copper sulfate compared to unexposed cells. Record cycle threshold values, CT, for each gene, where CT is number of cycles required for the fluorescent signal to exceed background levels; CT is inversely proportional to amount of cDNA (mRNA) in the sample. Genes investigated were ECAD, FOXC2, NCAD, SMAD, SNAI1, TWIST, and VIM, with ATCB as reference gene. ECAD expression is marker of epithelial cells, whereas FOXC2, NCAD, SNAI1, TWIST, and VIM expression marker of mesenchymal cells. After calculating ![]() values, geometric means of normalized values of three replicates each are shown in Table 1.

values, geometric means of normalized values of three replicates each are shown in Table 1.

Note 2: Logarithm transform was used because gene expression levels vary widely on the original scale and any log-transform will reduce the variability. log-base 2 specifically was used for fold-change in particular because it is easy to interpret and provides symmetry (all log-transforms provide this symmetry). For example, log(1/2, 2) returns -1, while log(2/1, 2) returns +1. Thus, base 2, we see a decrease by half or doubling of original scale is a fold change of ![]() . In contrast,

. In contrast, log(1/2, 10) returns -0.301, while log(2/1, 10) returns +0.301.

Table 1. Mean ![]() and fold change of gene expression values from qPCR for several genes from a rat lung cell line.

and fold change of gene expression values from qPCR for several genes from a rat lung cell line.

| Control | Copper-sulfate | Fold change | |

| ECAD | 34.6 | 35.7 | 0.6 |

| NCAD | 28.5 | 24.0 | 27.2 |

| SMAD | 29.5 | 25.0 | 28.2 |

| SNAI1 | 25.5 | 28.1 | 0.2 |

| FOXC2 | 27.6 | 27.0 | 1.9 |

| VIM | 23.1 | 16.4 | 134.4 |

| TWIST | 25.1 | 22.9 | 5.6 |

At face value, following four hour exposure to copper sulfate in media, some evidence that the epithelial cell line adopted gene expression profile of mesenchyme-like cells. However, weakness of fold-change is clear from Table 1: the quantity is sensitive to small values. ECAD expression in the cell line is low, thus high numbers of PCR cycles (mean = 36) and treated cells not much fewer (mean = 34.4).

Note 3: Calculation of ![]() is included. Geomean CT were

is included. Geomean CT were

| Control | CuSO4 | |

| ACTB | 32.2 | 32.5 |

| NCAD | 28.5 | 24.0 |

For control cells, ![]()

For treatment cells, ![]()

and ![]()

log2, ![]()

table value differs by rounding

Rates

Rates are a class of ratios in which the denominator is some measure of time. For example, the four year graduation rate of some Hawaii universities are shown in Table 2.

Table 2. Percent students graduation with bachelor’s degrees within four years or six years (cohort 2014, data source NCES.ed.gov)

| School | Private/Public | Four-year, Percent graduation |

Six-year, Percent graduation |

| Chaminade University | Private (non-profit) | 43 | 58 |

| Hawaii Pacific University | Private (non-profit) | 31 | 46 |

| University of Hawaii – Hilo | Public | 15 | 38 |

| University of Hawaii – Manoa | Public | 35 | 62 |

| University of Hawaii – West Oahu | Public | 16 | 39 |

| University of Phoenix | Private (for profit) | 0 | 19 |

Examples of rates

Rates are common in biology. To name just a few

- Basal metabolic rate (BMR), often measured by indirect calorimetry, reported in units kilo Joules per hour.

- Birth and death rates, components of population growth rate.

- Phred quality score, error rates of incorrectly called nucleotide bases by the sequencer

- Growth rate, which may refer to growth of the individual (somatic growth rate), or increase of number of individuals in a population per unit time

- Molecular clock hypothesis, rate of amino acid (protein) or nucleotide (DNA) substitution is approximately constant per year over evolutionary time.

Proportions

Proportions are also ratios, but they are used to describe one part to the whole. For example, 902 women (self-reported) day undergraduate students enrolled in 2014 at Chaminade University in Honolulu, Hawaii.

Practice: Given that the total enrollment for Chaminade in 2014 was of 1,326, calculate the proportion of female students to the total student body.

![]()

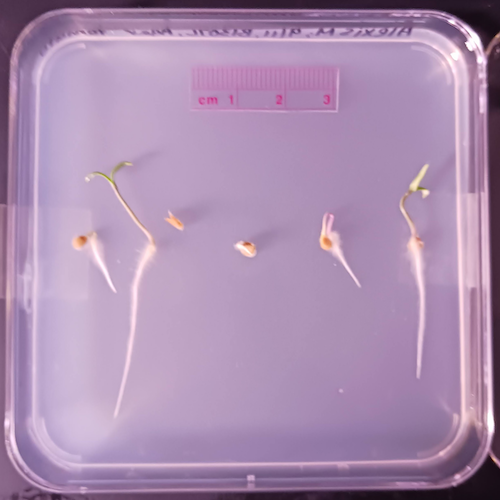

More practice: A common statistic in agriculture is germination percentage, count the number of seeds that sprout over a period of time divided by total number of seeds planted multiplied by 100. Figure 1 shows an example of a germination trial for five tomato seeds. Seed in position 3 (left to right 1 – 5) failed to germinate, whereas the other four seeds display range of seedling growth. (A seed coat is observed next to plant 2.)

Figure 1. Example planting of five tomato seeds, day 5, on agar Petri dish (M. Dohm).

Observations conducted over 10 days. Germination defined as evidence of radicle by day 10. Data collected by student for biostatistics project. A random sample of results from three plates is presented in Table 3.

Table 3. Germination of tomato seeds on agar Petri dishes.

| Plate* | 1 | 2 | 3 | 4 | 5 |

| 18 | Yes | No | Yes | Yes | Yes |

| 13 | Yes | Yes | Yes | No | Yes |

| 20 | No | Yes | Yes | Yes | Yes |

* Random sample of three plates drawn from among 30 plates.

We calculate the percent germination for the 15 seeds planted as

![]()

Thus, for the sample of plates the germination percent was 80%, which, coincidently, is the same result if germination percent was calculated for the five seeds displayed in Figure 1.

Comparing proportions

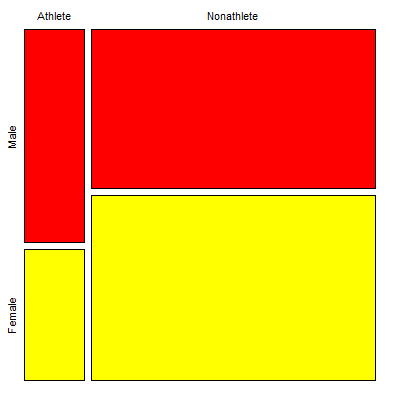

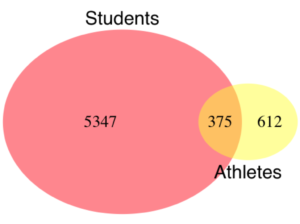

In some cases you may wish to compare two proportions or two ratios. The hypothesis tested is the difference between the two ratios, and the test is if the confidence interval of the difference includes zero. If it does, then we would conclude there is no statistical difference between the two proportions. In R, use the prop.test function. For example, 63 women were on team sport rosters at Chaminade in 2014, a proportion of 59% of all student athletes (n = 106). Recall from the example above that women were 68% of all students at Chaminade University. Title IX compliance requires that a university “maintain policies, practices and programs that do not discriminate against anyone on the basis of gender” (NCAA, http://www.ncaa.org/about/resources/inclusion/title-ix-frequently-asked-questions). In terms of athletic programs, then, universities are required to provide participation opportunities for women and men that are substantially proportionate to their respective rates of enrollment of full-time undergraduate students (NCAA, http://www.ncaa.org/about/resources/inclusion/title-ix-frequently-asked-questions)

Consider Chaminade University: Is there a statistical difference between proportion of women athletes and their proportion of total enrollment? We introduce statistical inference in Chapter 8, but for now, this is a test of the null hypothesis that the difference between the two proportions is zero.

At the R prompt type (remember, anything after the # sign is a comment and ignored by R).

women = c(62,902) #where 62 is the number of women athletes and 902 is the number of women students students = c(106,1326) #106 is the number of student athletes and 1326 is all students prop.test(women,students) #the default is a two-tailed test, i.e., no group differences

And R returns

2-sample test for equality of proportions with continuity correction data: women out of students X-squared = 3.6331, df = 1, p-value = 0.05664 alternative hypothesis: two.sided 95 percent confidence interval: -0.197532407 0.006861073 sample estimates: prop 1 prop 2 0.5849057 0.6802413

What is the conclusion of the test?

When you compare two groups, you’re asking whether the two groups are equal (the null hypothesis). Mathematically, that’s the same as saying the difference between the two groups is equal to zero.

First check the lower and upper limits of the confidence interval. A confidence interval is one way to report a range of plausible values for an estimate (see Ch 7.6 – Confidence intervals). It’s called a confidence interval because a probability is assigned to the range of values; a 95% confidence interval is interpreted as we’re 95% certain the true population value is somewhere between the reported limits. For our Chaminade University Title IX question, recall that we are asking whether the value of zero is included. The lower limit was -0.1975 and some change; the upper limit was 0.0068 and some change. Thus, zero is included and we would conclude that there was no statistical difference between the two proportions.

The second relevant output to look at is the p-value, or probability value. If the p-value is less than 5%, we typically reject the tested hypothesis. We will talk more about p-values and their relationship to inference testing in Chapter 8; for now, pay attention to the confidence interval (introduced in Chapter 3.4); if zero is included, then we conclude no substantial differences between the two proportions.

Relative change

It’s common practice to report on the amount of change between two quantities recorded over time. Relative change in root length b

To make percent increase (decrease), simply multiple 100%.

(1) ![]()

Indexes

Indexes are composite statistics that combine indicators. Indexes are common in business and economics, eg, Dow Jones Industrial average combines stock prices from 30 companies listed on the New York Stock Exchange and the CPI, or Consumer Protection Index, constructed by the US Bureau of Labor Statistics from a “market basket of consumer goods and services, used by the government and other institutions to track inflation. The CPI itself is made up of numerous indexes to account for regional price differences and other factors.

Some indexes presented in this book include

- Grade point average

- Body Mass Index (BMI)

- Comet assay indexes (tail intensity, tail length, tail moment) are used to assess DNA damage among organisms exposed to environmental contaminants (e.g., Mincarelli et al., 2019).

- Encephalization index, ratio of brain to body weight among species. Used to compare cognitive abilities.

Scales

Agreement scales for surveys, e.g., Likert scale or sliding scale (Sullivan and Artino 2013). For example, after learning about Theranos, students were asked:

How serious is this violation in your opinion?

| Not serious

0 |

Slightly serious

0 |

Moderately serious

2 |

Serious

4 |

Very serious

19 |

a 5-point scale.

Although an intuitive measure, how fast can an individual run, challenging to determine whether individual’s performance is at physiological maximum. Particularly for measures of performance capacity that involves behavior (motivation), may apply a race quality scale (eg., binary scale “good” or “bad” Husak et al 2006).

These examples reflect ordinal scales. Many of the nonparametric tests discussed in Chapter 15 are suitable for analysis of scales.

Limitations of ratios

Although the indexes may be easy to communicate, statistically, indexes have many drawbacks. Chief among these, variation in ratios may be due to change in numerator or denominator. Ratios and any index calculated by combining ratios seem simple enough, but have complicated statistical properties. Over the years, several authors have made critical suggestions for use of ratios and indexes. Some key references are Packard and Boardman (1988), Jasienski and Oikos (1999), Nee et al (2005), and Karp et al (2012). For example, ratios, computing trait value by body weight, are often used to compare some trait among individuals or species that differ in body size. However, this normalization attempt only removes the covariation between size and the trait if there is a 1:1 relationship between size and the trait. More typically, relationship between the trait and body size is allometric, i.e., the slope is not equal to one. Thus, ratio will over-correct for large size and under-correct for small size. The proper solution is to conduct the comparison as part of an analysis of covariance (ANCOVA, see Chapter 17.6).

Example

Which is the safer mode of travel: car or airplane?

The following discussion covers travel safety in the United States of America for a typical year, 2000*.

Note 4: *The following discussion excludes the 241 airline passenger deaths associated to the terrorist attacks of September 11 2001 in the USA; the NTSB also “…exclude(s illegal acts) for the purpose of accident rate computation. It also does not include considerations of 2020-2021 and effects of the COVID-19 pandemic on numbers of flights. The purpose of this discussion is not to convince you about the safety of modes of travel. Moreover, the following analysis is not necessarily the proper way to frame or analyze risk, but, rather, the purpose of this discussion is to highlight the impact of assumptions on estimating risk.

Between 2000 and 2023, there were 779 deaths associated with accidents of major air carriers in the USA. Year 2009 was the last multiple-casualty crash of a major U.S. carrier (Colgan Air Flight 3407); between 2010 and 2021, two fatal accidents, two fatalities were reported.

We’ve all heard the claim that it’s much safer to fly with a major airline than it is to travel by car (e.g., 1 January 2012 article in online edition of San Francisco Chronicle). There are a variety of arguments, but one statistical argument goes as follows. In 2000 in the United States, 638,902,993 persons traveled by major air carrier, whereas there were 190,625,023 licensed drivers. In 2000, 92 persons died in air travel (again, major carriers only), whereas 37,526 persons died in vehicle crashes (includes drivers and passengers). Thus, the risk of dying in air travel is given as the proportion ![]() , or 1.44e-07 (0.000014%), whereas the comparable proportion for death by motor vehicle is

, or 1.44e-07 (0.000014%), whereas the comparable proportion for death by motor vehicle is ![]() , or 1.97e-04 (0.0197%).

, or 1.97e-04 (0.0197%).

In other words, we can expect one death (actually 1.4) for every ten million airline passengers, but 20 deaths (actually 19.7) for every one hundred thousand licensed drivers. Thus, flying is a thousand times safer than driving (actual result 1,367 times; divide the rate of motor vehicle-caused deaths for licensed drivers by the rate for airlines). Proportions are hard to compare sometimes, especially when the per capita numbers differ (ten million vs. 100,000 in this case).

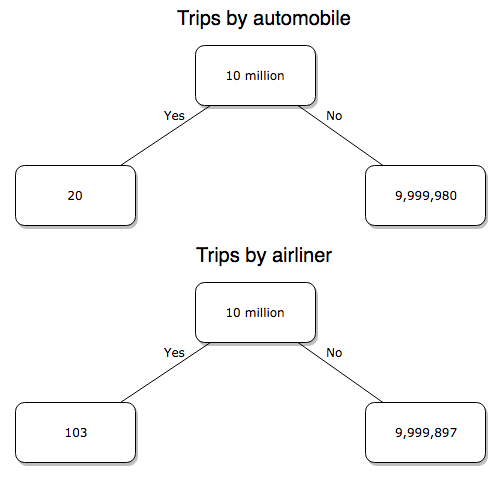

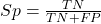

We can put the numbers onto a probability tree and get a sense of what we are looking at (Fig 2).

Figure 2. A probability tree to help visualize comparison of deaths (“yes”) by car travel and by airline travel in the United States for the year 2000.

Comparing rates and proportions

Without going into the details, we will do so in Chapter 9 Inferences on Categorical Data, comparing two rates is a chi-square, χ2, contingency table type of problem. More specifically, however, it is a binomial problem (Chapter 3.1, Chapter 6.5); there are two outcomes, death or no death, and we can describe how likely the event is to occur as a proportion. Because the numbers are large, we can use rely on the normal distribution for comparing the two proportions. We’ll explain this more in the next chapters, but for now it may be enough to present the equation for the comparison of two proportions under the assumption of normality, proportion z test.

and the null hypothesis (see Chapter 8) tested as that the two proportions are equal. This may be written as

H0 : p1-p2=0

We can assign statistical significance to the differences in events for the two modes of travel under this set of assumptions. Rcmdr has a nice menu-driven system for comparing proportions, but for now I will simply list the R commands.

At the R prompt type each line then submit the command.

total = 100000000 prop.test(c(19700,14),c(total,total)))

And the R output

prop.test(c(19700,14),c(total,total)) 2-sample test for equality of proportions with continuity correction data: c(19700, 14) out of c(total, total) X-squared = 19658, df = 1, p-value < 2.2e-16 alternative hypothesis: two.sided 95 percent confidence interval: 0.0001940984 0.0001996216 sample estimates: prop 1 prop 2 1.97e-04 1.40e-07

There’s a bit to unpack here. R is consistent; when it reports results of a statistical test, it typically returns the value of the test statistic (χ2 = 19658), the degrees of freedom for the test (df = 1), and the p-value (< 2.2e-16).

prop.test() were calculated by the Wilson Score method not the Wald method. While both are parametric tests and therefore sensitive to departures from normality (see Chapter 13.3), formulation of Wilson score method makes fewer assumptions (involving approximations of the population proportions) and therefore is considered more accurate.By convention in statistics, if a p-value, where “p” stands for probability, is less than 5%, we would say that our results are statistically significant from the null hypothesis. Looks pretty convincing to me, the difference of 19,700 deaths compared to 14 deaths is clearly different by any criterion and by the results of the statistical test, the p-value is several orders of magnitude smaller than 5%.

Safer to fly. By far, not even close. And similar conclusions would be reached if we compare different years, or averages over many years, or if we used a different way to express the amount of travel (e.g., miles/year) by these modes of transportation.

Are you convinced, really? Is it safer to fly?

Let’s try a little statistical reasoning — what assumptions did I make to do these calculations? We recognize immediately that many more people travel by car: that there are way more cars being driven then there are airline planes being flown. The question then is, have we properly adjusted for this difference? Here are a few considerations. My source for the numbers is the NTS 2001 book published by the U.S. Department of Transportation (www.dot.gov). We are conducting a risk analysis, and the first step is to make sure that we are comparing “apples with apples.” Here are two alternative solutions that at least compare, “Red Delicious” apples with “Macintosh” apples.

Option 1. There are many, many more licensed drivers than there are licensed commercial airline pilots. The standard comparison offered in the background above compared deaths per licensed car driver, but a different metric for air travel, the rate per passenger. This isn’t as bad of a comparison as it may seem — after all, the majority of deaths in car accidents are of the driver themselves. But it isn’t that hard to make the direct comparison — just find out how many commercial pilots there are — a direct comparison with licensed car drivers (stated above as 190,625,023). From the FAA we see that in 2009 there were 125,738 persons with commercial certificates. Since there are only 20 major airline carriers in the United States now (a few more were active in 2000, but we’ll put this aside), the number of licenses is an over estimate of the actual number we want — how many pilots of commercial airlines — but lets use this number for starters — after all, just because a person has a drivers license doesn’t mean they drive or ride in a car.

Number of deaths/yr: Let’s use 2000 data, a typical year prior to 9/11 (and excluding the Covid-19 pandemic). Airlines: 92 deaths; motor vehicles (includes passenger cars, trucks, etc., but not motorcycles): 37,526 deaths (drivers = 25,567; passengers = 10,695; 86 others).

Which mode of travel is riskier? I get a rate a rate of 7.3 X 10-4 deaths per commercial pilot, or

92125738

compared to a rate for car drivers of 1.97 X 10-4 deaths.

To summarize what we have so far, I get a result that suggests car travel is almost four times safer

7.3 x 10-41.97 x 10-4→7.31.97 = 3.7

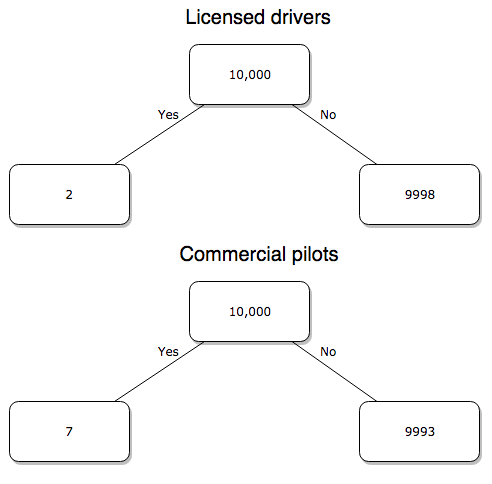

then traveling by commercial airliner. In whole numbers, these results translate to seven deaths for every 10,000 commercial pilots compared to two deaths for every 10,000 licensed car drivers (Fig 3).

Figure 3. Comparing totals of deaths adjusted by numbers of licensed drivers and by licensed commercial airline pilots in the United States.

R work follows. Enter and submit each command on a separate line in the script window

total = 10000 prop.test(c(2,7),c(total,total))

And the R output

prop.test(c(2,7),c(total,total)) 2-sample test for equality of proportions with continuity correction data: c(2, 7) out of c(total, total) X-squared = 1.7786, df = 1, p-value = 0.1823 alternative hypothesis: two.sided 95 percent confidence interval: -0.001187816 0.000187816 sample estimates: prop 1 prop 2 2e-04 7e-04

What’s happened? The p-value (0.1823) is not less than 5% and so we would conclude under this scenario that there is no difference between the proportions of deaths between the two modes of travel. Let’s keep going.

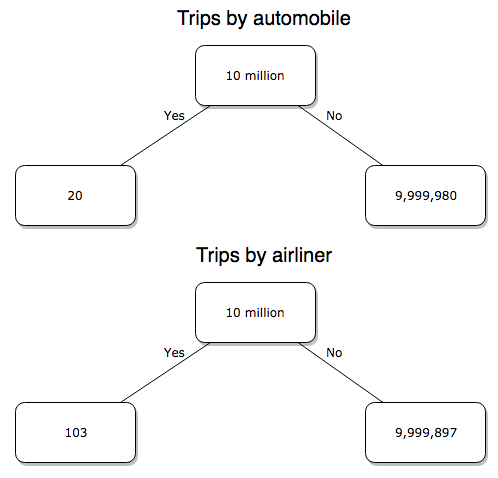

Option 2. There are many, many more cars on the road then there are airplanes flying commercial passengers. The standard comparison offered in the background information above identified death rates per individual driver, but used a different metric for airline travelers (number of deaths per passenger), which confuses individuals with travelers: what we need is the number of individuals that traveled by airliner, not the total number of passengers (which is many times higher, because of repeat flyers). How can we make a fair comparison for the two modes of travel? Most people never fly whereas most people drive (or ride in a car) frequently in the United States. To me, risk of travel might be better expressed in terms of a per trip rate. I want to know, what are my chances of dying each time I get into my car versus each time I fly on a commercial jet in the United States?

Number of trips/yr. For airlines, I use the number of departures (2000 = 8,951,773). But for cars, we need to decide how to get a similar number. It’s not available directly from the DOT (and would be difficult to get — studies with randomly selected drivers can yield as many as 5 trips per day for licensed drivers). I took the number of licensed drivers and bound the problem — at the low end, let’s say that only 2 trips per week (e.g., 50 weeks) are taken by licensed drivers (100 trips); at the upper end, let’s take 2 trips per day per week, or 500 trips/year. Thus, at the low end, we have 1.91 X1010 trips per year; at the upper end, 9.53 X 1010 trips per year.

Which mode of travel is riskier? Using the number of deaths/yr listed above in Option 1, I get a rate of 1.03 X 10-5 deaths/trip for air carriers

928.95 x 106

compared to a rate of 1.97 X 10-6 deaths/trip for cars (lower bound) or 3.9 X 10-7 deaths/trip for cars (upper bound). Here’s what the numbers look for in a tree (taking the lower number of trips per year for cars, Fig 4).

Figure 4. Comparing totals of deaths adjusted by numbers of car trips and by numbers of airline trips in the United States.

R work follow

total = 10000000 prop.test(c(20,103),c(total,total))

And the R output

prop.test(c(20,103),c(total,total)) 2-sample test for equality of proportions with continuity correction data: c(20, 103) out of c(total, total) X-squared = 54.667, df = 1, p-value = 1.428e-13 alternative hypothesis: two.sided 95 percent confidence interval: -1.057370e-05 -6.026305e-06 sample estimates: prop 1 prop 2 2.00e-06 1.03e-05

Now we have another really small p-value (1.428e-13), which suggests a statistically significant difference between the modes of air travel, but the difference in deaths is switched. I now have a result that suggests car travel is much safer then traveling with a commercial airliner! These calculations suggest that you are as much as 26 (upper bounds, five times for lower bounds) times more likely to die from a plane crash then you are behind the wheel. In whole numbers, these results indicate one death for every 100,000 airline flights compared to 1 death for every 500,000 (lower estimate) or 2,500,000 car trips!

Do I have it right and the standard answer is wrong? As Lee Corso says often on ESPN’s College GameDay program, “Not so fast, my friend!” (Wikipedia). Mark Twain was right to hold the skeptic’s view. Begin by listing the assumptions and by checking the logic of the comparisons (there are still holes in my logic!!). For one, if I am considering my risk of dying by mode of travel, it is far more likely that I will be in a car accident than I will an airline accident, simply because I don’t travel by airline that much. When we consider lifetime risk, we can see why the assertion that it is “safer to fly than drive” is true — we’re far more likely to belong to one of the reference populations involving automobiles (e.g., those who drive frequently, for many years) than we are to be among the frequent flyers reference populations.

Questions

1. Review and provide your own examples for

- index

- rate

- ratio

- proportion

2. Return to my story about travel safety, airlines vs cars: am I using “statistic” or “statistics?”

3. Like travel safety, we are often confronted by risk comparisons like the following: Which animal is more deadly to humans, dogs or sharks? Between the two, which lead to more hospitalizations in the United States? Work through your assumptions and use results from the International Shark Attack file.

- If a person lives in Nebraska, and never visits the ocean, how does a “shark attack” risk analysis apply? Is it a fair comparison to make between dog attacks and shark attacks? Why or why not.

4. Go to cappex.com/colleges and update institutional (gift) aid offered by Chaminade and HPU. Compare to University of Hawaii-Manoa.

5. Calculate percent increase for

- Annual atmospheric CO2 1980 and 2022.

Chapter 6 content

- Introduction

- Some preliminaries

- Ratios and probabilities

- Combinations and permutations

- Types of probability

- Discrete probability distributions

- Continuous distributions

- Normal distribution and the normal deviate (Z)

- Moments

- Chi-square distribution

- t distribution

- F distribution

- References and suggested readings

8.3 – Sampling distribution and hypothesis testing

Introduction

Understanding the relationship between sampling distributions, probability distributions, and hypothesis testing is the crucial concept in the NHST — Null Hypothesis Significance Testing — approach to inferential statistics. is crucial, and many introductory text books are excellent here. I will add some here to their discussion, perhaps with a different approach, but the important points to take from the lecture and text are as follows.

Our motivation in conducting research often culminates in the ability (or inability) to make claims like:

- “Total cholesterol greater than 185 mg/dl increases risk of coronary artery disease.”

- “Average height of US men aged 20 is 70 inches (1.78 m).”

- “Species of amphibians are disappearing at unprecedented rates.”

Lurking beneath these statements of “fact” for populations (just what IS the population for #1, for #2, and for #3?) is the understanding that not ALL members of the population were recorded.

How do we go from our sample to the population we are interested in? Put another way — How good is our sample? We’ve talked about how “biostatistics” can be generalized as sets of procedures you use to make inferences about what’s happening in populations. These procedures include:

- Have an interesting question

- Experimental design (Observational study? Experimental study?)

- Sampling from populations (Random? Haphazard?)

- Hypotheses: HO and HA

- Estimate parameters (characterize the population)

- Tests of hypotheses (inferences)

We have control of each of these — we choose what to study, we design experiments to test our hypotheses…We have already introduced these topics (Chapters 6 – 8).

We also obtain estimates of parameters, and inferential statistics applies to how we report our descriptive statistics (Chapter 3). Estimates of parameters like the sample mean and sample standard deviation can be assessed for accuracy and precision (e.g., confidence intervals).

Sampling distribution

Imagine drawing a sample of 30 from a population, calculating the sample mean for a variable (e.g., systolic blood pressure), then calculating a second sample mean after drawing a new sample of 30 from the same population. Repeat, accumulating one estimate of the mean, over and over again. What will be the shape of this distribution of sample means? The Central Limit Theorem states that the shape will be a normal distribution, regardless of whether or not the population distribution was normal, as long as the sample size is large (i.e., Law of Large Numbers). We alluded to this concept when we introduced discrete and continuous distributions (Chapter 6).

It’s this result from theoretical statistics that allows us to calculate the probability of an event from a sample without actually carrying out repeated sampling or measuring the entire population.

A worked example

To demonstrate the CLT we want R to help us generate many samples from a particular distribution and calculate the same statistic on each sample. We could make a for loop, but the replicate() function provides a simpler framework. We’ll sample from the chi-square distribution. You should extend this example to other distributions on your own, see Question 5 below.

Note 1: This example is much simpler to enter and run code in the script window, adjusting code directly as needed. If you wish to try to run this through Rcmdr, you’ll need to take a number of steps, and likely need to adjust the code and rerun anyway. Some of the steps in would be Rcmdr: Distributions → Continuous distributions → Chi-squared distribution → Sample from chi-square distribution…, then running Numerical summaries and saving the output to an object (e.g., out), extracting the values from the object (e.g., out$Table, confirm by running command str(out)— str() is an R utility to display the structure of an object), then testing the object for normality Rcmdr: Statistics → Test of normality, select Shapiro-Wilk, etc.. In other words, sometimes a GUI is a good idea, but in many cases, work with the script!

Generate x replicate samples (e.g., x = 10, 100, 1000, one million) of 30 each from chi-square distribution with one degree of freedom, test the distribution against null hypothesis (assume normal distributed, e.g., Shapiro-Wilk test, see Chapter 13.3), then make a histogram (Chapter 4.2).

x.10 <- replicate(10, { my.mean <- rchisq(30, 1) mean(my.mean) }) normalityTest(~x.10, test="shapiro.test") hist(x.10, col="orange")

Result from R

Shapiro-Wilk normality test

data: x.10

W = 0.87016, p-value = 0.1004

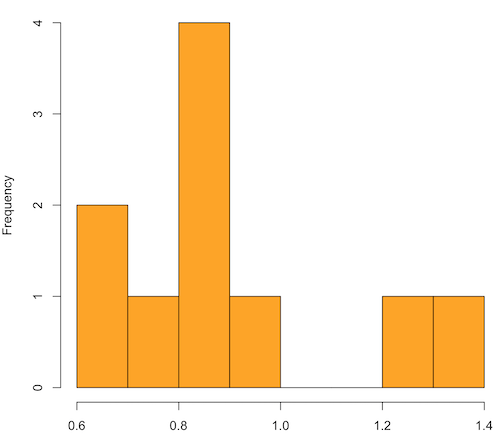

Figure 1. means of ten replicate samples drawn at random from chi-square distribution, df = 1.

Modify the code to draw 100 samples, we get

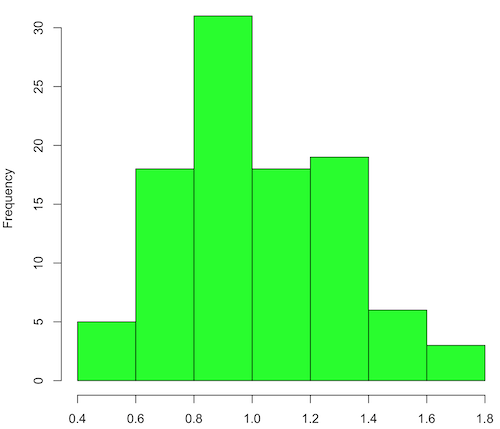

Figure 2. means of 100 replicate samples drawn at random from chi-square distribution, df = 1. Results from Shapiro-Wilks test: W = 0.97426, p-value = 0.04721

And finally, modify the code to draw one million samples, we get

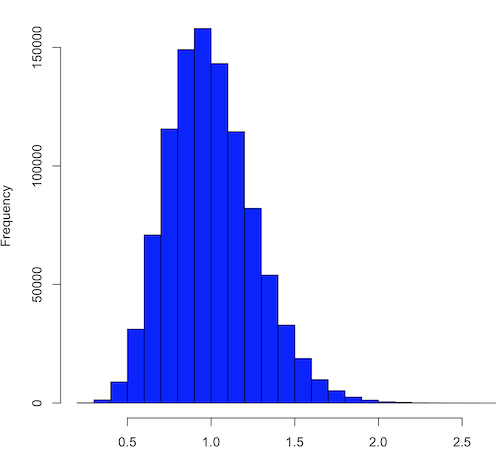

Figure 3. means of one million replicate samples drawn at random from chi-square distribution, df = 1. Normality test will fail to run, sample size of 5000 limit.

How to apply sampling distribution to hypothesis testing

First, a reminder of some definitions.

Estimate = we will always (almost) concern ourselves with how good our sample mean (such values are called estimates) is relative to the population mean, the thing we really want, but can only hope to get an estimate of.

Accuracy = how close to the true value is our measure?

Precision = how repeatable is our measure?

How can we tell if we have a good estimate? We want an estimate with an evaluation for accuracy and for precision. The sampling error provides an assessment of precision, whereas the confidence interval provides a statement of accuracy. We need an estimate of the sampling error for the statistic,

Sample standard error of the mean

We introduced sample error of the mean in section 3.4 of Chapter 3. Everything we measure can have a corresponding statement about how accurate (sampling error) is our estimate! First, we begin by asking, “how accurate is the mean that we estimate from a sample of a population?” How do we answer this? We could prove it in the mathematical sense of proof (and people have and do) OR we can use the computer to help. We’ll try this approach in a minute.

What we will show relates to the standard error of the population mean (SEM) or

![]()

, whose equation is shown below.

![]()

or equivalently, from the standard deviation we have

![]()

Note that the SEM takes the variance and divides through by the sample size. In general, then, the larger the sample size, the smaller the “error” around the mean. As we work through the different statistical tests, t-tests, analysis of variance, and related, you will notice that the test statistic is calculated as a ratio between a difference or comparison divided by some form of an error measurement. This is to remind you that “everything is variable.”

A note on standard deviation (SD) and standard error of the mean (SEM): SD estimates the variability of a sample of Xi‘s whereas SEM estimates the variability of a sample of means.

Let’s return to our thought problem and see how to demonstrate a solution. First, what is the population? Second, can we get the true population mean?